The main cost for any distribution utility is the cost of power, which makes up about 80% of its total cost*. Because power has to be managed in real time, a utility needs to consume all the power it has purchased. When there is a deviation, either a surplus or a deficit, the utility can sell or buy power in the market, either in the day-ahead market or in the real-time market during the day.

Grid stability also requires that states schedule only the quantum of power they can consume; otherwise, there is a risk of grid failure. To ensure grid stability, the regional grid imposes a deviation settlement penalty on the state concerned.

If a state fails to accurately predict demand for the next day or the next few hours, the commercial impact is significant: it is estimated at 0.5-2%* of the state's power purchase cost. Power purchase cost varies from state to state, but for major states in the country it can be estimated at INR 100 billion to INR 300 billion*. This uncertainty will grow as the share of renewable energy (RE) in the energy mix increases, because RE supply is intermittent. RE generation therefore also needs to be forecast in order to predict supply accurately.
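As a back-of-the-envelope check, the quoted ranges can simply be multiplied out. The sketch below pairs the lowest share with the lowest cost and the highest share with the highest cost; the constant and function names are illustrative, and the inputs are only the PTC India estimates quoted above.

```python
# Back-of-the-envelope range for the commercial impact of forecasting errors,
# using the figures quoted above: 0.5-2% of a power purchase cost of
# INR 100-300 billion for a major state.

IMPACT_SHARE = (0.005, 0.02)           # 0.5% to 2% of power purchase cost
PURCHASE_COST_INR_BN = (100.0, 300.0)  # INR billion, major states


def impact_range(share, cost):
    """Return (low, high) impact in INR billion.

    The low end pairs the smallest share with the smallest cost; the high end
    pairs the largest share with the largest cost.
    """
    return share[0] * cost[0], share[1] * cost[1]


low, high = impact_range(IMPACT_SHARE, PURCHASE_COST_INR_BN)
print(f"Estimated impact: INR {low:.1f} to {high:.1f} billion")
```

This prints a range of INR 0.5 to 6.0 billion for a single major state.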

Time series forecasting is the prediction of the future values of a quantity over a period of time, based on its past values. Drawing on Minfy’s AI capabilities, a machine learning solution was proposed that leverages AWS AI services such as Amazon SageMaker and Amazon Forecast.

Through a proof of concept, Minfy demonstrated that historical electricity demand data can be used to build, train and deploy a machine learning model that produces accurate forecasts.

The historical demand data is first uploaded to an Amazon S3 bucket. Then, in an Amazon SageMaker Jupyter notebook instance, the time series data, which comprises four different time series, is prepared for training different machine learning models.

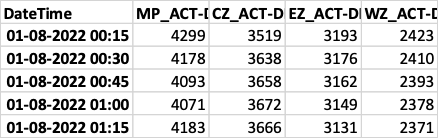

Figure 1 Historical Demand Data

The data pre-processing includes the following steps:

1. Managing outliers – Outliers are data points that differ significantly from the patterns and trends of the other values in the time series. They can arise from equipment failure, power outages due to electrical faults, human error and similar causes. Because outliers can hinder the learning process of the machine learning models, their values need to be replaced. Imputation techniques such as forward filling, backward filling and polynomial interpolation are used to replace these outlier values.

2. Data analysis – After cleaning, the dataset is analysed to gain insights into the seasonality, trends and noise in the data. This analysis is crucial for creating additional features that help the machine learning models learn better.

3. Feature creation – Based on the analysis, new features are created and used as “related time series” by the AWS forecasting models.

4. Transforming the dataset – Different machine learning models require data in different formats, such as CSV or JSON Lines. In this final step, the dataset is transformed, partitioned into training and testing data, and made ready to be fed to the different models.
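The outlier step in the list above can be sketched in plain Python. The detection rule is an assumption, since the case study names only the replacement techniques: a Hampel-style filter flags points far from their local median, and flagged points are then replaced by linear interpolation, with backward and forward filling at the edges of the series. The function names and toy data are illustrative.

```python
import statistics


def flag_outliers(series, window=5, threshold=3.0):
    """Flag points that sit far from the median of their neighbourhood.

    Hampel-style rule (an assumption; the detection rule used in the PoC is
    not stated): a point is an outlier if it deviates from the local median
    by more than `threshold` times the local median absolute deviation (MAD).
    """
    n = len(series)
    half = window // 2
    flags = []
    for i in range(n):
        neighbours = series[max(0, i - half):min(n, i + half + 1)]
        med = statistics.median(neighbours)
        mad = statistics.median([abs(x - med) for x in neighbours])
        flags.append(mad > 0 and abs(series[i] - med) > threshold * mad)
    return flags


def replace_outliers(series, flags):
    """Replace flagged points: linear interpolation between the nearest
    unflagged neighbours, with backward/forward filling at the edges."""
    valid = [i for i, f in enumerate(flags) if not f]
    if not valid:
        raise ValueError("every point is flagged; nothing to fill from")
    out = list(series)
    for i, flagged in enumerate(flags):
        if not flagged:
            continue
        left = max((j for j in valid if j < i), default=None)
        right = min((j for j in valid if j > i), default=None)
        if left is None:            # leading gap: backward fill
            out[i] = series[right]
        elif right is None:         # trailing gap: forward fill
            out[i] = series[left]
        else:                       # interior gap: linear interpolation
            w = (i - left) / (right - left)
            out[i] = series[left] + w * (series[right] - series[left])
    return out


demand = [100.0, 102.0, 101.0, 103.0, 5.0, 104.0, 102.0, 101.0]  # toy data
flags = flag_outliers(demand)
cleaned = replace_outliers(demand, flags)
```

The dip to 5.0 is flagged and replaced by 103.5, halfway between its neighbours. Polynomial interpolation, which the case study also mentions, would replace the linear step with a higher-order fit through several neighbouring points.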

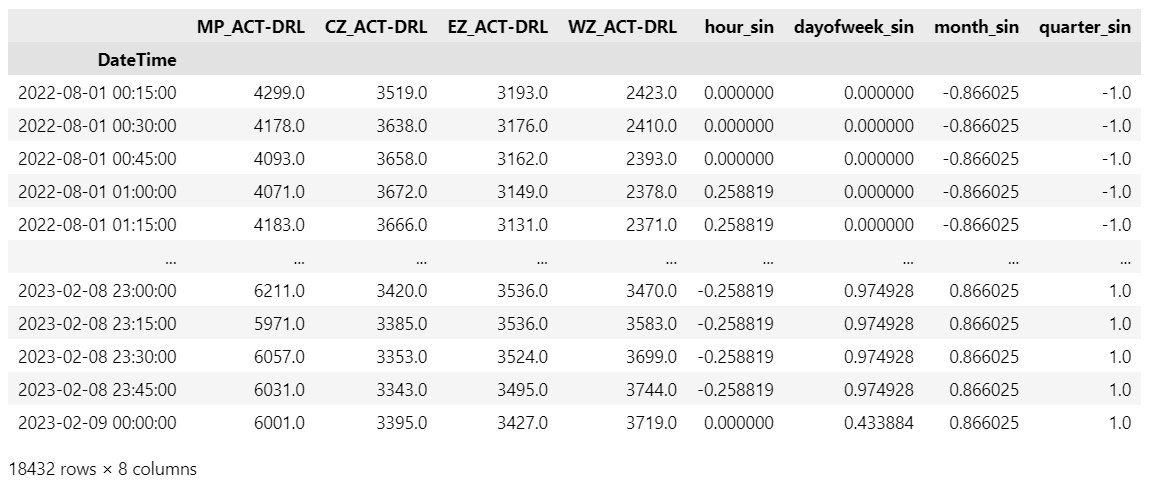

Figure 2 Training Data
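For DeepAR, SageMaker expects JSON Lines in which each record holds a `start` timestamp and a `target` array, optionally with `cat` (categorical features) and `dynamic_feat` (related time series with the same length as the target). The sketch below builds training and test data under assumptions of our own: 15-minute data, a hypothetical weekend flag as the only related time series, and one categorical ID per zone. Following the DeepAR convention, training records drop the last `prediction_length` points, while test records keep the full series so that the held-out tail can be scored.

```python
import json
from datetime import datetime, timedelta

FREQ_MINUTES = 15  # assumption: 15-minute demand blocks (96 per day)


def weekend_flag(start, n, freq_minutes=FREQ_MINUTES):
    """Hypothetical related time series: 1.0 on Saturdays/Sundays, else 0.0."""
    return [
        float((start + timedelta(minutes=freq_minutes * k)).weekday() >= 5)
        for k in range(n)
    ]


def to_deepar_jsonl(series_by_zone, start, prediction_length):
    """Return (train, test) JSON Lines strings in DeepAR's input format."""
    if prediction_length <= 0:
        raise ValueError("prediction_length must be positive")
    train, test = [], []
    for zone_id, zone in enumerate(sorted(series_by_zone)):
        target = series_by_zone[zone]
        feat = weekend_flag(start, len(target))
        base = {"start": start.strftime("%Y-%m-%d %H:%M:%S"), "cat": [zone_id]}
        # Training record: hold back the last prediction_length points.
        train.append(json.dumps({**base,
                                 "target": target[:-prediction_length],
                                 "dynamic_feat": [feat[:-prediction_length]]}))
        # Test record: full series; the held-out tail is what gets scored.
        test.append(json.dumps({**base, "target": target,
                                "dynamic_feat": [feat]}))
    return "\n".join(train), "\n".join(test)


demand = {"East": [float(v) for v in range(200)],
          "West": [float(2 * v) for v in range(200)]}  # toy data
train_jsonl, test_jsonl = to_deepar_jsonl(demand, datetime(2022, 2, 15), 96)
```

The `cat` and `dynamic_feat` fields are optional; a model that does not use zone IDs or related series could drop them.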

We train multiple machine learning models, such as Amazon Forecast AutoML and DeepAR, and test them over a period using a rolling window. The models’ performance is compared on multiple parameters, such as training time, inference time and total training cost. Finally, because a model’s accuracy degrades over time as demand patterns change, the models need to be retrained on the most recent data points.
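A rolling-window (rolling-origin) evaluation can be sketched in a few lines: at each origin the model sees only the data before it, forecasts the next `horizon` points, and is scored on them. The two forecasters below are simple stand-ins, not the Amazon Forecast or DeepAR models from the PoC. Accuracy is scored with MAPE, the metric reported in the results, and wall-clock time is recorded as a crude proxy for the timing comparisons above.

```python
import time
from functools import partial


def mape(actual, forecast):
    """Mean absolute percentage error as a fraction (0.02 means 2%)."""
    return sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)


def seasonal_naive(history, horizon, season):
    """Stand-in model: repeat the last observed season."""
    last = history[-season:]
    return [last[k % season] for k in range(horizon)]


def last_season_mean(history, horizon, season):
    """Stand-in model: flat forecast at the mean of the last season."""
    window = history[-season:]
    return [sum(window) / len(window)] * horizon


def rolling_backtest(series, model, horizon, first_origin, step):
    """Average MAPE over successive windows, plus total elapsed seconds."""
    scores = []
    started = time.perf_counter()
    for origin in range(first_origin, len(series) - horizon + 1, step):
        forecast = model(series[:origin], horizon)  # only past data is visible
        scores.append(mape(series[origin:origin + horizon], forecast))
    return sum(scores) / len(scores), time.perf_counter() - started


series = [10.0, 20.0, 30.0, 40.0] * 6  # toy data with a period of 4
models = {
    "seasonal_naive": partial(seasonal_naive, season=4),
    "last_season_mean": partial(last_season_mean, season=4),
}
results = {
    name: rolling_backtest(series, model, horizon=4, first_origin=8, step=4)
    for name, model in models.items()
}
```

Retraining on the most recent data corresponds to refitting at each origin; these stand-ins have no parameters, so they simply re-read the latest history.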

An inference pipeline was developed for the 1-month trial period. Each day during the trial, the previous day’s demand data was uploaded to Amazon S3, processed using the same methodology described above, and then used as “context data” to forecast demand for the next day. As the trial progressed, the forecasted values were compared with the true values and the results were stored.
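The daily loop of the trial can be simulated as follows. `forecast_next_day` is a hypothetical stand-in for the deployed model (it repeats the previous day), the 96 blocks per day assume 15-minute data, and the S3 upload and pre-processing steps are omitted. What the sketch shows is the ordering: forecast a day first, score it once the actuals arrive, then add those actuals to the context.

```python
from datetime import date, timedelta

BLOCKS_PER_DAY = 96  # assumption: 15-minute blocks


def forecast_next_day(context):
    """Hypothetical stand-in for the deployed model: repeat the last day."""
    return context[-BLOCKS_PER_DAY:]


def run_trial(daily_actuals, first_day):
    """Forecast each day from all earlier days, score it, and log the result."""
    context, log = [], []
    for d, actual in enumerate(daily_actuals):
        day = first_day + timedelta(days=d)
        if context:  # the first day only seeds the context
            forecast = forecast_next_day(context)
            ape = [abs(a - f) / abs(a) for a, f in zip(actual, forecast)]
            log.append({"date": day.isoformat(), "mape": sum(ape) / len(ape)})
        context.extend(actual)  # today's actuals become context for tomorrow
    return log


days = [[100.0] * BLOCKS_PER_DAY, [100.0] * BLOCKS_PER_DAY,
        [110.0] * BLOCKS_PER_DAY]  # toy data: three flat days
log = run_trial(days, date(2022, 2, 15))
```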

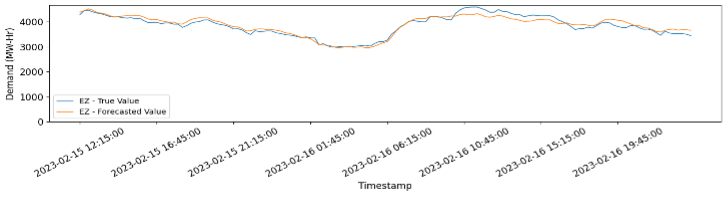

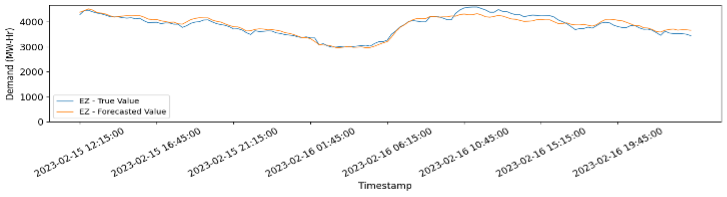

With Minfy’s forecasting solution, PTC achieved 98% accuracy (0.02 MAPE) for intraday forecasting and over 90% accuracy (0.1 MAPE) for day-ahead forecasting. The model’s training time was reduced from 4 hours to just 40 minutes, and inference time was reduced to a few seconds.
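The accuracy figures appear to be stated as one minus the mean absolute percentage error (MAPE), which matches the pairs quoted (0.02 MAPE and 98%). For n forecast intervals with true values y_t and forecasts ŷ_t:

```latex
\mathrm{MAPE} = \frac{1}{n}\sum_{t=1}^{n}\left|\frac{y_t - \hat{y}_t}{y_t}\right|,
\qquad
\text{accuracy} = 1 - \mathrm{MAPE}
```

Under this reading, a MAPE of 0.02 corresponds to 98% accuracy and a MAPE of 0.1 to 90%.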

Deviation settlement penalties worth INR 1-6 billion* can be saved, because the forecasted values fall within the permissible range (5%) of the true values. In addition, with AWS infrastructure the solution can be deployed within weeks, compared with the months an on-premises setup would take.
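Whether a forecast stays within the permissible range can be checked interval by interval. The sketch below assumes a simple symmetric ±5% band around the true value; actual deviation settlement regulations may define the permissible limits and penalties differently, so the function names and the band are illustrative only.

```python
def within_band(actual, forecast, tolerance=0.05):
    """True where |forecast - actual| <= tolerance * |actual|."""
    return [abs(f - a) <= tolerance * abs(a) for a, f in zip(actual, forecast)]


def share_within_band(actual, forecast, tolerance=0.05):
    """Fraction of intervals whose forecast lies inside the band."""
    flags = within_band(actual, forecast, tolerance)
    return sum(flags) / len(flags)


actual = [100.0, 100.0, 100.0, 100.0]    # toy true demand
forecast = [104.0, 94.0, 106.0, 100.0]   # toy forecasts
share = share_within_band(actual, forecast)
```

Here two of the four intervals fall inside the band, so `share` is 0.5.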

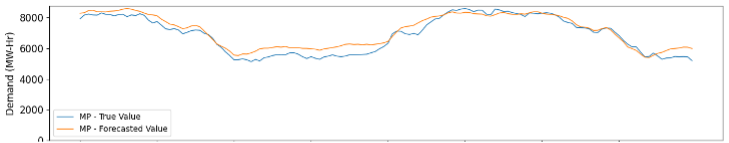

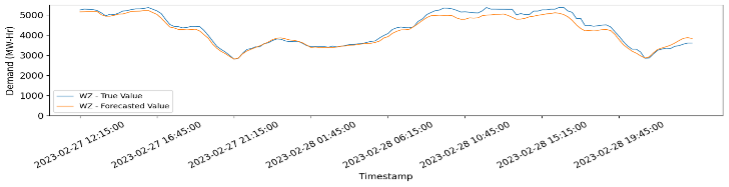

Figure 3 Comparison of True and Forecasted Values for East Zone and Central Zone, from 15th Feb 12:15:00 to 18th Feb 00:00

Figure 4 Comparison of True and Forecasted Values for West Zone and MP, from 27th Feb 12:15:00 to 1st March 00:00

* Data source – PTC India

PTC pioneered the power market in India. It undertakes trading activities that include long-term trading of power generated by large power projects, as well as short-term trading arising from the supply-and-demand mismatches that inevitably occur in various regions of the country. Since July 2001, when it began trading power on a sustainable basis, PTC has provided optimal value to both buyers and sellers while ensuring optimum use of resources. PTC has grown from strength to strength, surpassing growth expectations, and had evolved into a Rs. 3,496.60 crore company as of March 2020, with a client base that covers all the state utilities in the country as well as some power utilities in neighboring countries. https://www.ptcindia.com/

— Authors:

Arun Kumar (Head of Technology & Short-Term Power Market, PTC)

Arun Kumar is head of technology and short-term power market at PTC. He has over 25 years of experience in the power sector as a corporate leader, equity analyst and management consultant. Arun previously set up a full-fledged forecasting team that provided demand and renewable-generation forecasting solutions to RLDCs, SLDCs, DISCOMs and RE generators. That solution used a conventional SQL database, with the data and weather science team creating the forecasting algorithms and training the models that generated the outputs. He used AWS tools for the first time and found the experience very rewarding and cost-effective.

Jigar Jain (Project Manager, PTC)

Jigar Jain is a project manager at PTC and a cloud practitioner and enthusiast. Since 2021, Jigar has been responsible for major decisions in PTC India’s move to the cloud and to cloud-native architecture. He is currently working actively on blockchain technologies and sustainability on the cloud.

Rishi Khandelwal (Deeptech Associate Consultant, Minfy)

Rishi Khandelwal is a data scientist at Minfy who aims to solve challenges in the energy and resources industry. After completing his master’s in AI and Entrepreneurship in 2022, Rishi joined Minfy to help companies leverage the power of data to solve business challenges.

Over the past few years, the world has realized that data is, by far, the most valuable asset for an organization in the digital economy. Much like a financial asset that needs management and governance to appreciate in value, raw data needs some work before it yields actionable insights. These insights can then give an organization competitive differentiation. Machine learning (ML) is one of the technical approaches to extracting insights from data. ML commonly dwells in the realm of predictive analytics, where we attempt to peek into the future using our understanding of the past and present. While this is quite a broad description, there are more specific use cases of ML across industry verticals. In this article, I discuss an ML use case that has a direct impact on the costs incurred or revenue generated by an organization. I take the domain of health insurance as an example to explain the intuition and approach using ML techniques. However, the general idea of using ML for risk modeling is pervasive across many other industry verticals and segments.

When an insurer underwrites a contract, it assigns a financial value to the risk covered by the contract, and the insured party pays a premium to offload the risk to the insurer. From the insurer’s perspective, the gross risk value is based on the likelihood of the risk materializing for a certain proportion of the insured entities. This is an oversimplified description, but it should suffice in the context of this use case. The insurer therefore calculates the premium so that it covers its own risk. If the insurer’s stance is too defensive, it will charge a high premium, which also makes it less competitive. On the other hand, being too aggressive with lower premiums exposes it to higher financial risk. There are many more parameters, including laws and regulations, that the insurer considers when computing the premium. The point is that risk valuation and premium computation are fundamental to the financial success of an insurer.

Traditionally, risk modeling has been performed by the actuarial function of insurers. This may involve statistical analysis and Monte Carlo simulation, among other techniques. Another approach to risk modeling is predictive analytics using ML. The idea is to predict the likelihood of future events based on historical data about the same or similar events. Let’s take health insurance as an example. For a given individual, the insurer first looks at past data to see what health incidents have occurred for others in the same category. The term ‘category’ refers to a combination of several attributes, such as age, gender, occupation, lifestyle and existing medical conditions. Next, the insurer may consider other correlated future events for that individual. For instance, a person progressing in their career may move from a field job to a desk job. This influences how active the person’s lifestyle is and, in turn, the risk of cardiovascular disease. Environmental factors may also change the risk profile. As an example, consider an asthmatic person moving from a temperate to a polar climate.

Clustering is an ML technique commonly used for customer segmentation. Using this technique, a health insurance underwriter can identify distinct groups in the population of insured entities. This can be a starting point for estimating the risk value, and therefore the premium, of insurance contracts catering to these groups. This is not a new concept: you may have noticed your health insurance premium climbing steeply when you reach a certain age. It’s possible that the clustering ML model assigns you to a different group on the basis of an age threshold.
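k-means is one common clustering algorithm; the article does not name a specific one, so its use here is an assumption. The pure-Python sketch below clusters hypothetical policyholders on two illustrative features, age and body-mass index, using Lloyd's algorithm with a deterministic farthest-first initialisation.

```python
import math


def farthest_first(points, k):
    """Deterministic initialisation: start from the first point, then keep
    adding the point farthest from all centroids chosen so far."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(nxt))
    return centroids


def kmeans(points, k, iterations=20):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    centroids = farthest_first(points, k)
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Hypothetical policyholders: (age in years, body-mass index).
policyholders = [(25, 22), (28, 24), (30, 23), (58, 29), (62, 31), (65, 30)]
labels, centroids = kmeans(policyholders, k=2)
```

In this toy data the split is driven mostly by age, because age spans a wider range than BMI and therefore dominates the Euclidean distance. In practice, features are usually standardised before clustering, and the number of clusters `k` is chosen by inspecting the data.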

Clustering is an unsupervised ML technique, meaning that it doesn’t need a labeled dataset to train and test. A health insurer can use supervised ML algorithms, such as multiclass classification, for finer control of risk valuation. Suppose that a customer opts for additional cover on his/her policy for certain ailments and declares pre-existing medical conditions when requesting a quote for the additional cover. For this use case, the insurer can train a supervised ML model on historical data about health insurance policies, including claims and settlements. The data should also include attributes describing the insured entity’s demographics and pre-existing medical conditions. From this dataset, the ML model learns to recognize patterns in the occurrence of diseases and the settlement value of claims. Once the model is trained and tested, it can take a new insurance proposal and predict the probability of a health claim event for each of the ailments covered in the proposal. The higher the probability, the higher the risk, and consequently the higher the premium quoted by the insurer.
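One simple way to obtain a separate probability for each covered ailment is to fit one binary classifier per ailment (often called binary relevance) instead of a single multiclass model. The sketch below uses logistic regression trained by batch gradient descent in plain Python. The features (scaled age, a diabetes flag and a smoker flag), the ailments and the labels are all hypothetical toy data, not an insurer's schema.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X, y, lr=0.5, epochs=3000):
    """Binary logistic regression fitted by batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_proba(model, x):
    """Probability of a claim for feature vector x under a fitted model."""
    w, b = model
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical history: [age / 100, has_diabetes, is_smoker] per policy.
X = [[0.25, 0, 0], [0.30, 0, 1], [0.45, 1, 0], [0.55, 1, 1],
     [0.60, 0, 1], [0.35, 1, 0], [0.28, 0, 0], [0.65, 1, 1]]
# 1 = a claim for that ailment was settled on the policy (hypothetical).
claims = {"cardiac": [0, 0, 0, 1, 1, 0, 0, 1],
          "renal":   [0, 0, 1, 1, 0, 1, 0, 1]}

models = {ailment: fit_logistic(X, y) for ailment, y in claims.items()}
proposal = [0.62, 1, 1]  # hypothetical new applicant
risk = {ailment: predict_proba(m, proposal) for ailment, m in models.items()}
```

The resulting `risk` dictionary maps each ailment to a claim probability for the proposal. With real data, each model would be tested on held-out policies before use, as the text describes.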

Note that the purpose of the ML models is to quantify the relative value of risk. Their output could be supplied as input to the actuarial process; in other words, computing the specific financial value of an insurance contract may take the probabilities of future health events into account. This matters for other reasons as well, particularly compliance. Insurance is a regulated industry, and insurers need appropriate controls in their core processes to ensure fairness, interpretability and explainability.
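As a deliberately simplified illustration of feeding such probabilities into pricing, the expected claim cost of a proposal can be taken as the sum, over covered ailments, of claim probability times expected settlement amount, with a proportional loading added on top. The probabilities, amounts (in INR) and the 25% loading below are hypothetical, and real actuarial pricing involves many more factors, including the laws and regulations mentioned earlier.

```python
def expected_claim_cost(probabilities, settlement_amounts):
    """Sum over ailments of P(claim) x expected settlement amount."""
    return sum(p * settlement_amounts[ailment]
               for ailment, p in probabilities.items())


def indicative_premium(probabilities, settlement_amounts, loading=0.25):
    """Expected claim cost plus a proportional loading (illustrative) for
    expenses, margin and uncertainty."""
    return expected_claim_cost(probabilities, settlement_amounts) * (1 + loading)


probabilities = {"cardiac": 0.02, "renal": 0.01}             # hypothetical model output
settlement_amounts = {"cardiac": 500_000, "renal": 300_000}  # hypothetical, INR
premium = indicative_premium(probabilities, settlement_amounts)
```

Here the expected claim cost is INR 13,000 and the indicative premium is INR 16,250. A larger loading corresponds to the defensive stance described earlier (safer but less competitive), and a smaller one to the aggressive stance.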

Amazon SageMaker is an AWS service that addresses the complete life cycle of ML projects. SageMaker JumpStart includes several pre-built ML solutions. In the context of risk modeling, two JumpStart solutions address related use cases: one for credit rating and another for price optimization. Explore these JumpStart solutions to learn more about the data preparation techniques and ML algorithms used to achieve specific business outcomes.

— Author: Anirban De

© 2022 Minfy™. Minfy Technologies. All rights reserved.
