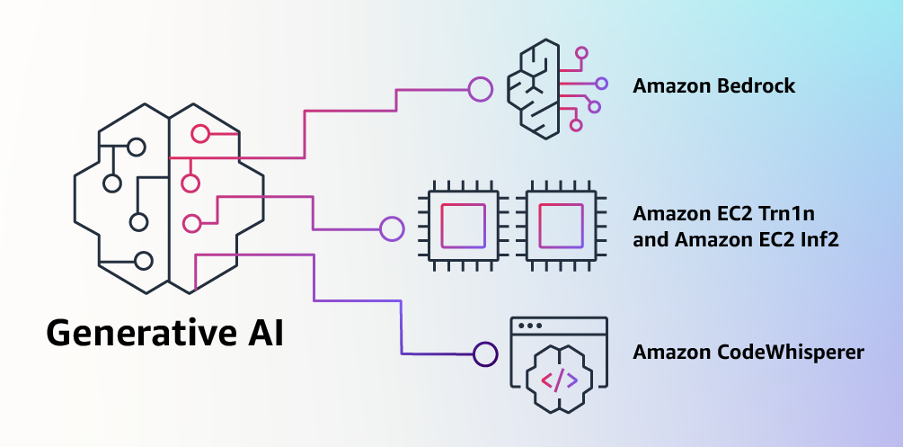

Generative AI, a form of artificial intelligence, has the ability to produce fresh content and concepts such as discussions, narratives, visuals, videos, and music. It relies on extensive pre-training of large models known as foundation models (FMs) using vast amounts of data.

AWS offers generative AI capabilities that empower you to revolutionize your applications, create entirely novel customer experiences, enhance productivity significantly, and drive transformative changes in your business. You have the flexibility to select from a variety of popular FMs or leverage AWS services that integrate generative AI seamlessly, all supported by cost-effective cloud infrastructure designed specifically for generative AI.

Unlike traditional ML models that require gathering labelled data, training multiple models, and deploying them for each specific task, foundation models offer a more efficient approach. With foundation models, there's no need to collect labelled data for every task or train numerous models. Instead, you can utilize the same pre-trained foundation model and adapt it to different tasks.

Foundation models have a very large number of parameters, which lets them handle a diverse range of tasks and learn intricate concepts. Their pre-training also exposes them to vast amounts of internet data, so they pick up a broad range of patterns and can apply that knowledge across many contexts.

Additionally, foundation models can be customized to perform domain-specific functions that provide unique advantages to your business. This customization process requires only a small portion of the data and computational resources typically needed to train a model from scratch. Customized FMs can make each customer's experience special by representing the company's personality, manner, and offerings in different fields like banking, travel, and healthcare.

Amazon CodeWhisperer is an AI coding companion that uses generative AI to improve developer productivity. AWS also offers sample solutions that combine its AI services with leading foundation models to simplify the deployment of popular generative AI use cases such as call summarization and question answering.

Achieve optimal price-performance ratio for generative AI by utilizing infrastructure powered by AWS-designed ML chips and NVIDIA GPUs. Efficiently scale your infrastructure to train and deploy Foundation Models with hundreds of billions of parameters while maintaining cost-effectiveness.

AWS offers powerful instances, such as GPU-equipped EC2 instances, that can accelerate the training process. These instances are optimized for machine learning workloads and provide the necessary computational resources to train large language models efficiently.

Consider a variety of FMs offered by AI21 Labs, Anthropic, Stability AI, and Amazon to discover the most suitable model for your specific use case.

Easily tailor FMs for your business using a small set of labelled examples. Rest assured that your data stays secure and confidential as it is encrypted and never leaves your Amazon Virtual Private Cloud (VPC).

Integrate and deploy FMs into your applications and workloads on AWS using familiar controls and integrations with AWS's wide range of capabilities and services, such as Amazon SageMaker, SageMaker JumpStart, and Amazon S3.

Amazon Bedrock is a service that helps you access and use AI models created by top AI start-ups and Amazon. It provides an easy way to choose from a variety of models that are suitable for your specific needs. With Bedrock, you can quickly start using these models without worrying about managing servers or infrastructure.

You can also customize the models with your own data, and seamlessly integrate them into your applications using familiar AWS tools like Amazon SageMaker. Bedrock makes it easy to test different models and manage your AI models efficiently at a large scale.
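As a rough illustration of calling a model without managing servers, here is a minimal sketch that uses the `boto3` `bedrock-runtime` client. The model ID `amazon.titan-text-express-v1`, the region, and the request and response field names are assumptions based on the Titan Text format and are not taken from this article; check the model's documentation before relying on them. The helper names `build_titan_request` and `generate_text` are hypothetical.

```python
import json


def build_titan_request(prompt: str, max_tokens: int = 256, temperature: float = 0.5) -> str:
    """Serialize a text-generation request body (assumed Titan Text schema)."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
        },
    })


def generate_text(prompt: str,
                  model_id: str = "amazon.titan-text-express-v1",  # assumed model ID
                  region: str = "us-east-1") -> str:               # assumed region
    """Send the prompt to a Bedrock-hosted model and return the generated text.

    Requires AWS credentials and access to the chosen model.
    """
    import boto3  # imported lazily so build_titan_request works without the SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=model_id,
        body=build_titan_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Assumed response layout for Titan Text models.
    return payload["results"][0]["outputText"]
```

Switching to a model from another provider would mean changing `model_id` and the request body, since each model family defines its own schema.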

You can choose an FM from AI21 Labs, Anthropic, Stability AI, or Amazon to match your specific needs. Typical use cases include:

- Text generation
- Chatbots
- Image generation
- Text summarization
- Search
- Personalization

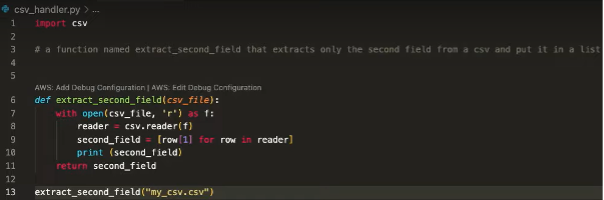

CodeWhisperer uses large language models to provide suggestions tailored to your coding needs. From syntax and code structure suggestions to algorithm optimizations and design patterns, CodeWhisperer analyses your code and offers insights that help you write cleaner, more efficient code.

CodeWhisperer has been trained on billions of lines of code, which lets it provide code suggestions quickly. These suggestions range from small code snippets to complete functions, and they are generated in real time based on your comments and existing code. With CodeWhisperer, you can skip time-consuming coding tasks and get up to speed faster when working with unfamiliar APIs.

It has the capability to identify and categorize code suggestions that closely resemble open-source training data. This feature enables the retrieval of the respective open-source project's repository URL and license information, facilitating convenient review and attribution.

Check your code for hidden security flaws that are difficult to detect. Receive suggestions on how to fix these issues right away. Follow the recommended security guidelines from organizations like OWASP and adhere to best practices for handling vulnerabilities, including those related to cryptographic libraries and other security measures.

Amazon has been focused on artificial intelligence (AI) and machine learning (ML) for more than 20 years. ML plays a significant role in many of the features and services that Amazon offers to its customers. There are thousands of engineers at Amazon who are dedicated to ML, and it is an important part of Amazon's history, values, and future. Amazon Titan models are created using Amazon's extensive experience in ML, with the goal of making ML accessible to everyone who wants to use it.

Amazon Titan FMs are highly capable models that have been pretrained on extensive datasets, enabling them to excel in various tasks. They can be utilized as they are or privately fine-tuned with your own data, eliminating the need to manually annotate significant amounts of data for a specific task.

Automate natural language tasks such as summarization and text generation

Titan Text is a generative large language model designed to perform tasks like summarization, text generation (such as creating blog posts), classification, open-ended question answering, and information extraction.

Enhance search accuracy and improve personalized recommendations

Titan Embeddings is an LLM that converts text inputs, such as words, phrases, or even longer sections of text, into numerical representations called embeddings. These embeddings capture the semantic meaning of the text. Although Titan Embeddings does not generate text itself, it offers value in applications such as personalization and search. By comparing embeddings, the model can provide responses that are more relevant and contextual compared to simple word matching.
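To make "comparing embeddings" concrete, the sketch below ranks documents by cosine similarity to a query vector. The three-dimensional vectors are invented for illustration only; real embeddings returned by a model have many more dimensions, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, documents):
    """Return document names ordered from most to least similar to the query."""
    return sorted(
        documents,
        key=lambda name: cosine_similarity(query_vec, documents[name]),
        reverse=True,
    )


# Toy vectors standing in for embeddings (invented values).
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "flight schedule": [0.1, 0.9, 0.2],
    "hospital visiting hours": [0.0, 0.2, 0.9],
}
# Stands in for the embedding of a query such as "how do I get my money back?"
query = [0.8, 0.2, 0.1]

ranking = rank_by_similarity(query, documents)
print(ranking)
```

The query shares no words with "refund policy", yet that document ranks first because its vector points in nearly the same direction. This is the advantage over simple word matching.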

Support responsible use of AI by reducing inappropriate or harmful content

Titan FMs are designed to identify and eliminate harmful content within data, decline inappropriate content within user input, and screen model outputs that may include inappropriate elements like hate speech, profanity, or violence.

Amazon SageMaker JumpStart provides pre-trained, open-source models for a wide range of problem types to help you get started with ML. You can incrementally train and tune these models before deployment. JumpStart also provides solution templates that set up infrastructure for common use cases, and executable example notebooks for ML with Amazon SageMaker.

Amazon SageMaker JumpStart is designed to speed up your machine learning work. It serves as a hub where you can access pre-trained models, including the foundation models available in the Foundation Model Hub.

These models enable you to carry out various tasks such as article summarization and image generation. The pre-trained models are highly adaptable to suit your specific use cases and can be seamlessly deployed in production using either the user interface or the software development kit (SDK).

It's worth noting that none of your data is utilized in the training of the underlying models. Your data is securely encrypted and remains within the virtual private cloud (VPC), ensuring utmost privacy and confidentiality.
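Deployment through the SDK might look like the sketch below, which uses the `JumpStartModel` class from the SageMaker Python SDK. The model ID `huggingface-textgeneration-gpt2` and the payload fields `text_inputs` and `max_length` are assumptions that should be checked against the model's JumpStart documentation, and the helper names are hypothetical. Running `deploy_jumpstart_model` creates a billable endpoint and requires AWS credentials and a SageMaker execution role.

```python
def build_generation_payload(prompt: str, max_length: int = 50) -> dict:
    """Build a text-generation request (field names are an assumed schema)."""
    return {"text_inputs": prompt, "max_length": max_length}


def deploy_jumpstart_model(model_id: str = "huggingface-textgeneration-gpt2"):
    """Deploy a JumpStart model to a real-time endpoint and return its predictor.

    The default model ID is an assumption; pick one listed in JumpStart.
    """
    # Imported lazily so the payload helper works without the SageMaker SDK.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # With no arguments, deploy() uses the defaults JumpStart defines for the model.
    return model.deploy()


# Example usage (not executed here because it creates AWS resources):
#   predictor = deploy_jumpstart_model()
#   result = predictor.predict(build_generation_payload("Once upon a time"))
#   predictor.delete_endpoint()  # clean up to stop incurring charges
```

Depending on the model, the predictor's serializer or content type may need adjusting before `predict` accepts this payload.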

The GPT-2 model available in JumpStart generates human-like text from a given prompt. It is useful for automating writing and generating new sentences, with applications ranging from blog and social media content to books.

GPT-2 belongs to the Generative Pre-trained Transformer series and was the precursor to GPT-3. The OpenAI ChatGPT application, by contrast, was launched on a model from the later GPT-3.5 series.

AWS Trainium is a specialized machine learning (ML) accelerator developed by AWS for training deep learning models with over 100 billion parameters. Each Amazon Elastic Compute Cloud (EC2) Trn1 instance is equipped with up to 16 AWS Trainium accelerators, providing a cost-effective and high-performance solution for cloud-based deep learning training.

While the adoption of deep learning is growing rapidly, many development teams face budget constraints that limit the extent and frequency of training required to enhance their models and applications. EC2 Trn1 instances powered by Trainium address this challenge by enabling faster training times and delivering up to 50% cost savings compared to similar Amazon EC2 instances.

Trainium has been specifically designed for training natural language processing, computer vision, and recommender models that find applications in a wide range of areas, including text summarization, code generation, question answering, image and video generation, recommendation systems, and fraud detection.

Currently, a significant amount of time and resources goes into training FMs, because most customers are only beginning to put them into production.

However, as FMs become widely deployed, most of the expense will come from running the models and performing inference. Unlike training, which occurs periodically, a production system generates predictions, or inferences, continuously, potentially millions per hour.

These inferences must be processed in real time, which requires low-latency, high-throughput networking. A prime example is Alexa, which receives millions of requests every minute and accounts for 40% of all compute costs.
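The cost argument above can be made concrete with back-of-the-envelope arithmetic. Every number in this sketch is hypothetical and chosen only to show how continuous inference can outweigh periodic training; none of the figures come from AWS.

```python
def monthly_inference_cost(requests_per_hour: float,
                           cost_per_1k_requests: float,
                           hours_per_month: float = 730) -> float:
    """Cost of serving requests continuously for one month."""
    return requests_per_hour * hours_per_month / 1000 * cost_per_1k_requests


def inference_share(training_cost: float, inference_cost: float) -> float:
    """Fraction of total monthly spend that goes to inference."""
    return inference_cost / (training_cost + inference_cost)


# Hypothetical inputs, for illustration only.
training = 50_000.0                                   # retraining, amortized per month
inference = monthly_inference_cost(2_000_000, 0.05)   # 2M requests/hour at $0.05 per 1,000
share = inference_share(training, inference)

print(f"inference: ${inference:,.0f}/month, share of total: {share:.0%}")
```

Under these assumed figures inference already exceeds training spend, and because it scales with request volume, its share grows as adoption grows.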

Amazon recognized early that inference would come to dominate the cost of machine learning. As a result, it made investing in inference-optimized chips a priority several years ago.

In 2018, it announced Inferentia, the first chip purpose-built for inference. Inferentia helps Amazon run trillions of inferences each year and has already saved companies like Amazon more than a hundred million dollars in capital expenses.

The results so far are remarkable, and AWS sees numerous opportunities to keep innovating, particularly as workloads grow larger and more complex with generative AI being integrated into more applications.

AWS is exploring ways to help customers use LLMs effectively, so that they can offer distinctive experiences to their own customers with minimal effort. The goal is a system in which customers can use foundation models created by AWS partners such as Anthropic, Stability AI, and Hugging Face, thereby delivering value to the market.

AWS aims to provide customers with the tools and support necessary to create personalized Generative AI solutions tailored to their individual needs and preferences.

References

https://aws.amazon.com/generative-ai/

https://aws.amazon.com/bedrock/

https://aws.amazon.com/machine-learning/trainium/

https://aws.amazon.com/blogs/machine-learning/deploy-generative-ai-models-from-amazon-sagemaker-jumpstart-using-the-aws-cdk/

https://aws.amazon.com/blogs/machine-learning/build-a-serverless-meeting-summarization-backend-with-large-language-models-on-amazon-sagemaker-jumpstart/

© 2022 Minfy™. Minfy Technologies. All rights reserved.